Publications

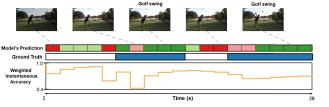

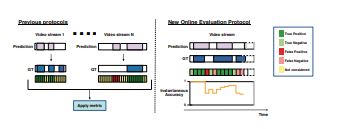

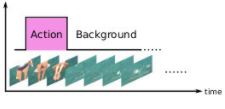

Rethinking Online Action Detection in Untrimmed Videos: A Novel Online Evaluation Protocol

M. Baptista-Ríos, R. J. López-Sastre, F. Caba Heilbron, J. van Gemert, F. J. Acevedo-Rodríguez, S. Maldonado-Bascón.

IEEE Access, 2020. Code

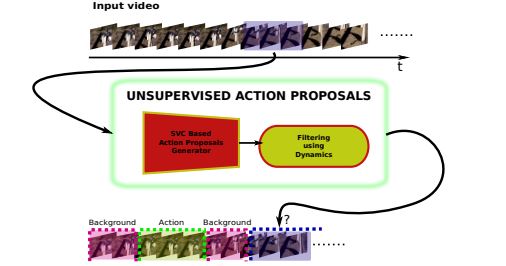

Unsupervised Action Proposals Using Support Vector Classifiers for Online Video Processing

M. Baptista-Ríos, R. J. López-Sastre, F. J. Acevedo-Rodríguez, P. Martín-Martín, S. Maldonado-Bascón.

Sensors, 2020. Code

The Instantaneous Accuracy: a Novel Metric for the Problem of Online Human Behaviour Recognition in Untrimmed Videos

M. Baptista-Ríos, R. J. López-Sastre, F. Caba Heilbron, J. van Gemert, F. J. Acevedo-Rodríguez, S. Maldonado-Bascón.

10th International Workshop on Human Behaviour Understanding, ICCV, 2019. Code

Fallen People Detection Capabilities Using Assistive Robot

S. Maldonado-Bascón, C. Iglesias-Iglesias, P. Martín-Martín, S. Lafuente-Arroyo.

Electronics, 2019. Data

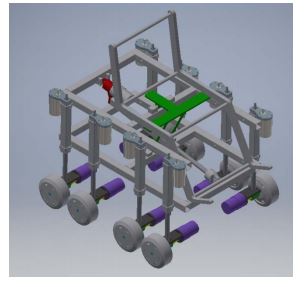

A Novel Approach for a Leg-Based Stair-Climbing Wheelchair based on Electrical Linear Actuators

E. Pereira, H. Gómez-Moreno, C. Alen-Cordero, P. Gil-Jiménez, S. Maldonado-Bascón.

Segmentation in Corridor Environments: Combining floor and ceiling detection

S. Lafuente-Arroyo, S. Maldonado-Bascón, H. Gómez-Moreno, C. Alen-Cordero.

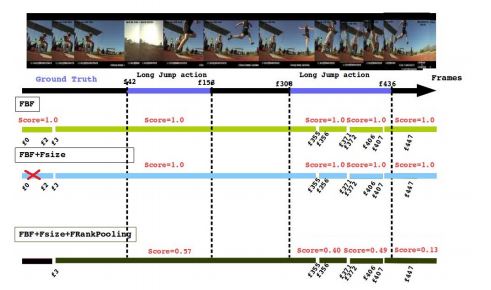

Combining Online Clustering and Rank Pooling Dynamics for Action Proposals

N. Khatir, R. J. López-Sastre, M. Baptista-Ríos, S. Nait-Bahloul, F. J. Acevedo-Rodríguez.

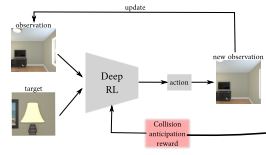

Collision anticipation via deep reinforcement learning for visual navigation

E. Gutiérrez-Maestro, R. J. López-Sastre, S. Maldonado-Bascón.

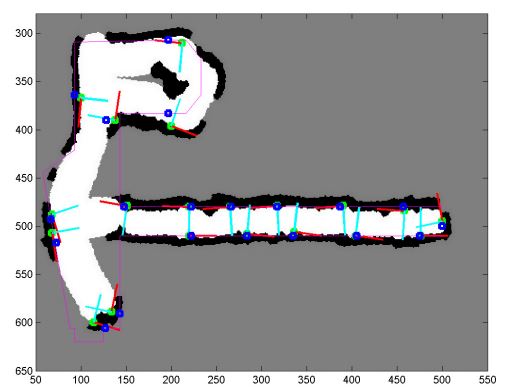

On-Board Correction of Systematic Odometry Errors in Differential Robots

S. Maldonado-Bascón, R. J. López-Sastre, F. J. Acevedo-Rodríguez, P. Gil-Jiménez.

Journal of Sensors, 2019. Data

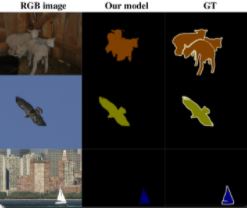

Learning to Exploit the Prior Network Knowledge for Weakly-Supervised Semantic Segmentation

C. Redondo-Cabrera, M. Baptista-Ríos, R. J. López-Sastre.

IEEE Transactions on Image Processing, 2019. Code

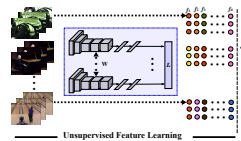

Unsupervised learning from videos using temporal coherency deep networks

C. Redondo-Cabrera and R. J. López-Sastre.

Computer Vision and Image Understanding, 2019. Code

Embarrassingly Simple Model for Early Action Proposal

M. Baptista-Ríos, R. J. López-Sastre, F. J. Acevedo-Rodríguez, S. Maldonado-Bascón.

The challenge of simultaneous object detection and pose estimation: a comparative study

D. Oñoro-Rubio, R. J. López-Sastre, C. Redondo-Cabrera, P. Gil-Jiménez.

Image and Vision Computing, 2018. Code

In pixels we trust: From Pixel Labeling to Object Localization and Scene Categorization

C. Herranz-Perdiguero, C. Redondo-Cabrera and R. J. López-Sastre.